Humans, Agents, and the Future of Work

What if the real disruption from AI isn’t just automation, but communication?

That's the quiet but important argument at the heart of a new paper from Stanford released last week. In “Future of Work with AI Agents: Auditing Automation and Augmentation Potential across the U.S. Workforce,” Shao et al. don’t just hype job losses or gains. Instead, they introduce something we rarely see in AI-and-labor research: a structured, data-driven audit of the misalignment between what workers want AI to do and what current agents can actually deliver.

Want vs. Can

The authors surveyed over 1,500 U.S. workers across 104 occupations, using an audio-enhanced interview protocol and the O*NET dataset to break jobs into 844 tasks. Each worker then marked those tasks as preferred for automation, augmentation, or no AI involvement at all. They did so not with a simple yes or no, but through a new shared framework: the Human Agency Scale (HAS).

At the same time, AI experts separately assessed whether today’s LLM-based agent systems could realistically perform each task. It’s an unusually rich double audit: one vertical (workers’ preferences), one horizontal (model capabilities), joined into a single database called WORKBank.

Four Zones of Alignment

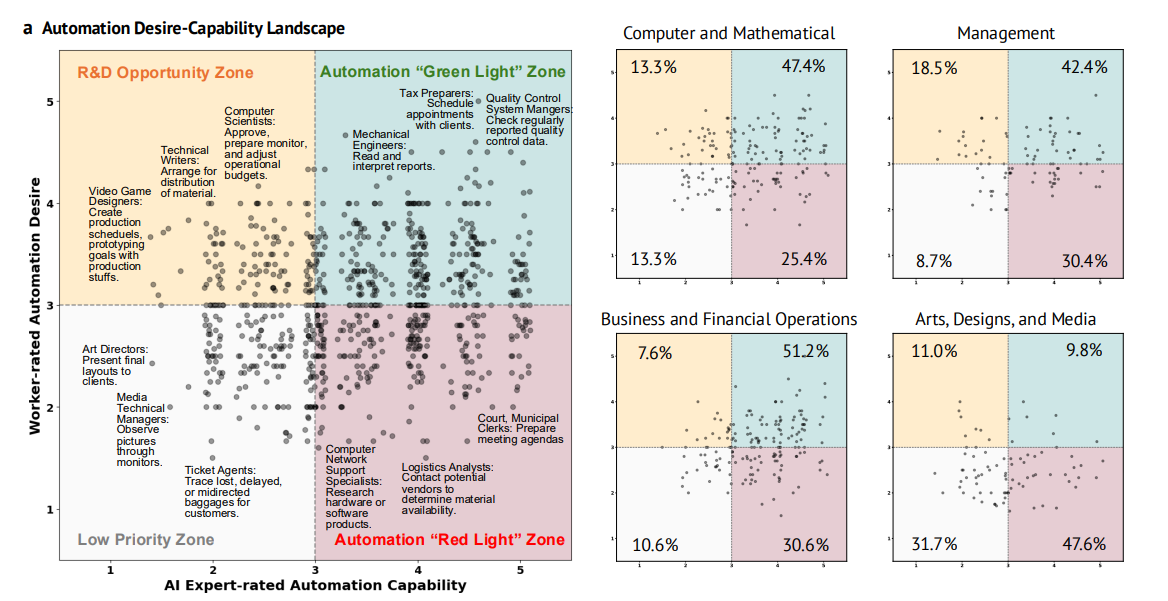

The result is a new kind of AI map that divides labor into four color-coded zones:

🟢 Green Light: Workers want AI, and AI can handle it

🔴 Red Light: AI can handle it, but workers don't want it to

🟡 R&D Opportunity: Workers want help, but the tech isn't ready yet

⚪ Low Priority: Workers don't want AI, and AI isn't reliable anyway

What you see here is not just a heat map, but a statistical diagnostic. It’s a snapshot of misalignment at scale, between what we hope AI will do for us and what it’s actually doing.
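To make the zoning concrete, here is a minimal sketch of how a task could be placed on that map from two scores: how much workers want automation, and how capable experts judge current agents to be. It follows the paper's quadrant definitions (Green Light: high desire, high capability; Red Light: low desire, high capability; R&D Opportunity: high desire, low capability; Low Priority: both low). The 1-to-5 scale, the 3.0 cut-off, the `Task` class, and the example tasks and scores are all assumptions made up for illustration, not values taken from WORKBank.

```python
from dataclasses import dataclass

# Assumption: both scores are averages on a 1-5 scale, split at the midpoint.
THRESHOLD = 3.0


@dataclass(frozen=True)
class Task:
    name: str
    desire: float      # how much workers want this task automated
    capability: float  # how capable experts rate current agents on it


def zone(task: Task, threshold: float = THRESHOLD) -> str:
    """Place a task in one of the four zones by comparing both scores to a cut-off."""
    wants = task.desire >= threshold
    able = task.capability >= threshold
    if wants and able:
        return "Green Light"
    if wants:
        return "R&D Opportunity"
    if able:
        return "Red Light"
    return "Low Priority"


# Hypothetical tasks and scores, invented for this example only.
tasks = [
    Task("Schedule appointments", desire=4.4, capability=4.1),
    Task("Draft budget forecasts", desire=3.8, capability=2.2),
    Task("Write editorial content", desire=1.9, capability=3.9),
    Task("Mediate staff disputes", desire=1.7, capability=1.5),
]

for t in tasks:
    print(f"{t.name}: {zone(t)}")
```

A hard cut-off like this is the simplest possible choice. Tasks that sit near the boundary would flip zones with small changes in either score, which is one reason to read the map as a diagnostic rather than a verdict.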

Complexity in Disguise

If Apple’s “Illusion of Thinking” paper showed that LLMs collapse under cognitive load, this paper shows that humans collapse under design fantasies. We assume our jobs can be neatly split into modules, easily handed off to AI. But human work is messy. Tasks blend into one another. Judgment isn’t modular. You can’t outsource 40% of nursing or journalism or teaching and expect clean results.

Even more revealing: humans most want AI to tackle cognitively demanding, interpersonally sensitive, or emotionally taxing tasks. Think performance reviews. Strategy decks. Conflict mediation. But those are precisely the kinds of tasks LLM agents are worst at.

A Framework That Asks the Right Questions

To their credit, Shao et al. try to avoid techno-utopianism or hand-waving. The audit method, though not without flaws, is thoughtfully constructed. The use of the Human Agency Scale (HAS) offers a rare shared language for mapping how much involvement humans want in a given task, not just whether they want AI involved or not.

Still, there are soft spots. Expert assessments are subjective. Inter-rater reliability isn’t discussed. Task capability is treated as stable, despite rapid model advances and context dependence. And the paper sidesteps hard questions: is model failure due to architecture, interface, or prompt design?

Nonetheless, the paper is more than a well-timed provocation. In a landscape where most “AI for work” papers stop at job-level predictions, this one drills deeper. More provocatively: the paper suggests that AI may not erode jobs so much as reshape expectations. Workers will demand AI support in precisely the domains where agents are least trustworthy. That’s not a risk of job loss. That’s a risk of cognitive overreach.